Show That if F(x) = P(X ≤ x) Is Continuous Then Y = F(X) Has a Uniform Distribution on [0, 1]

For discrete random variables, the conditional probability mass function of $Y$ given the occurrence of the value $x$ of $X$ can be written according to its definition as

$$p_{Y \mid X}(y \mid x) = P(Y = y \mid X = x) = \frac{P(X = x,\ Y = y)}{P(X = x)}.$$

Dividing by $P(X = x)$ rescales the joint probability to the conditional probability, as in the diagram in the introduction. Since $P(X = x)$ is in the denominator, this is defined only for non-zero (hence strictly positive) $P(X = x)$. Furthermore, since $P(X = x) \le 1$, it must be true that $P(Y = y \mid X = x) \ge P(X = x,\ Y = y)$, and the two are equal only when $P(X = x) = 1$ or when the joint probability is zero. In any other case, it is more likely that $X = x$ and $Y = y$ if it is already known that $X = x$ than if that is not known.
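The rescaling described above can be sketched in a few lines of Python. This is a minimal illustration, not part of the original article: the joint table `joint` and the helper names `marginal_x` and `conditional_y_given_x` are hypothetical, and the probabilities are made-up values chosen only so that the arithmetic is exact.

```python
from fractions import Fraction

# Hypothetical joint pmf P(X = x, Y = y) for two binary variables
# (illustrative values only, not taken from the article).
joint = {
    (0, 0): Fraction(1, 8),
    (0, 1): Fraction(3, 8),
    (1, 0): Fraction(1, 4),
    (1, 1): Fraction(1, 4),
}


def marginal_x(joint, x):
    """P(X = x): sum the joint pmf over every value of Y."""
    return sum(p for (xi, _), p in joint.items() if xi == x)


def conditional_y_given_x(joint, x):
    """Return {y: P(Y = y | X = x)}; undefined when P(X = x) is zero."""
    px = marginal_x(joint, x)
    if px == 0:
        raise ValueError("P(X = x) is zero, so the conditional pmf is undefined")
    # Dividing each joint probability by P(X = x) rescales the row to sum to 1.
    return {y: p / px for (xi, y), p in joint.items() if xi == x}


cond = conditional_y_given_x(joint, 0)
```

With this table, `cond` sums to 1 over `y`, and each conditional probability is at least as large as the corresponding joint probability, in line with the inequality stated above.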

The relation with the probability distribution of $X$ given $Y$ is

$$P(Y = y \mid X = x)\, P(X = x) = P(X = x,\ Y = y) = P(X = x \mid Y = y)\, P(Y = y).$$

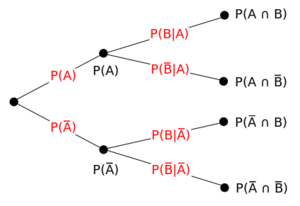

As a concrete example, the picture below shows a probability tree, breaking down the conditional distribution over the binary random variables $X$ and $Y$. The four nodes on the right-hand side are the four possible events in the space. The leftmost node has value one. The intermediate nodes each have a value equal to the sum of their children. The edge values are the nodes to their right divided by the nodes to their left. This reflects the idea that all probabilities are conditional. $P(X)$ and $P(Y)$ are conditional on the assumptions of the whole probability space, which may be something like "$X$ and $Y$ are the outcomes of flipping fair coins."

Horace either walks or runs to the bus stop. If he walks he catches the bus with probability . If he runs he catches it with probability . He walks to the bus stop with a probability of . Find the probability that Horace catches the bus.
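A problem of this shape can be approached with the law of total probability, $P(B) = \sum_i P(B \mid A_i)\, P(A_i)$. The sketch below is only an illustration: the function name `total_probability` is hypothetical, and the three numbers are assumed placeholder values, since the problem's own values are not reproduced in this text.

```python
def total_probability(priors, conditionals):
    """Law of total probability: P(B) = sum_i P(B | A_i) * P(A_i).

    priors       -- P(A_i) for a partition A_1, ..., A_n (must sum to 1)
    conditionals -- P(B | A_i), in the same order
    """
    if abs(sum(priors) - 1.0) > 1e-12:
        raise ValueError("priors must sum to 1")
    return sum(p * c for p, c in zip(priors, conditionals))


# Assumed placeholder values, NOT the values from the problem statement.
p_walk = 0.4
p_catch_given_walk = 0.3
p_catch_given_run = 0.7

p_catch = total_probability(
    [p_walk, 1 - p_walk],
    [p_catch_given_walk, p_catch_given_run],
)
```

Substituting the actual probabilities from the problem for the three placeholders gives the probability that Horace catches the bus.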

Similarly, for continuous random variables, the conditional probability density function of $Y$ given the occurrence of the value $x$ of $X$ can be written as

$$f_{Y \mid X}(y \mid x) = \frac{f_{X,Y}(x, y)}{f_X(x)},$$

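where $f_{X,Y}(x, y)$ gives the joint density of $X$ and $Y$, while $f_X(x)$ gives the marginal density for $X$. Also in this case it is necessary that $f_X(x) > 0$. The relation with the probability distribution of $X$ given $Y$ is given by

$$f_{Y \mid X}(y \mid x)\, f_X(x) = f_{X,Y}(x, y) = f_{X \mid Y}(x \mid y)\, f_Y(y).$$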
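As a rough numerical illustration of the continuous case, the sketch below divides a joint density by its marginal and checks that the result integrates to 1 in $y$. Everything here is an assumption made for the example: the joint density $f(x, y) = x + y$ on the unit square, the helper names, and the use of a simple midpoint rule for the integrals.

```python
def joint_density(x, y):
    """Assumed joint density f(x, y) = x + y on the unit square, 0 elsewhere."""
    return x + y if 0.0 <= x <= 1.0 and 0.0 <= y <= 1.0 else 0.0


def integrate(func, a, b, steps=1000):
    """Midpoint-rule approximation of the integral of func over [a, b]."""
    h = (b - a) / steps
    return h * sum(func(a + (i + 0.5) * h) for i in range(steps))


def marginal_x(x):
    """Marginal density of X: integrate the joint density over y in [0, 1]."""
    return integrate(lambda y: joint_density(x, y), 0.0, 1.0)


def conditional_density_given(x):
    """Return the function y -> f(y | x); undefined where the marginal is 0."""
    fx = marginal_x(x)
    if fx <= 0.0:
        raise ValueError("marginal density is zero, conditional density undefined")
    return lambda y: joint_density(x, y) / fx


cond = conditional_density_given(0.25)
area = integrate(cond, 0.0, 1.0)  # should be close to 1
```

For this particular density the marginal is $f_X(x) = x + \tfrac{1}{2}$, so the conditional density at $x = 0.25$ is $(0.25 + y) / 0.75$.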

The concept of the conditional distribution of a continuous random variable is not as intuitive as it might seem: Borel's paradox shows that conditional probability density functions need not be invariant under coordinate transformations.

Conditional distributions and marginal distributions are related using Bayes' theorem, which is a simple consequence of the definition of conditional distributions in terms of joint distributions.

Bayes' theorem for discrete distributions states that

$$P(Y = y \mid X = x) = \frac{P(X = x \mid Y = y)}{P(X = x)}\, P(Y = y).$$

This can be interpreted as a rule for turning the marginal distribution $P(Y = y)$ into the conditional distribution $P(Y = y \mid X = x)$ by multiplying by the ratio $P(X = x \mid Y = y) / P(X = x)$. These functions are called the prior distribution, posterior distribution, and likelihood ratio, respectively.

For continuous distributions, a similar formula holds relating conditional densities to marginal densities:

$$f_{Y \mid X}(y \mid x) = \frac{f_{X \mid Y}(x \mid y)}{f_X(x)}\, f_Y(y).$$

Horace turns up at school either late or on time. He is then either shouted at or not. The probability that he turns up late is If he turns up late, the probability that he is shouted at is . If he turns up on time, the probability that he is still shouted at for no particular reason is .

You hear Horace being shouted at. What is the probability that he was late?
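A question like this one is a direct application of Bayes' theorem with a two-event partition (late, on time). The sketch below shows one way to set it up. The function name `posterior` is hypothetical, and the three probabilities are assumed placeholders, because the problem's own values are not reproduced in this text.

```python
from fractions import Fraction


def posterior(prior, likelihood, likelihood_given_not):
    """Bayes' theorem for an event A and its complement.

    P(A | B) = P(B | A) P(A) / [P(B | A) P(A) + P(B | not A) P(not A)]
    """
    evidence = likelihood * prior + likelihood_given_not * (1 - prior)
    if evidence == 0:
        raise ValueError("P(B) is zero, so the posterior is undefined")
    return likelihood * prior / evidence


# Assumed placeholder values, NOT the values from the problem statement.
p_late = Fraction(1, 5)
p_shout_given_late = Fraction(3, 4)
p_shout_given_on_time = Fraction(1, 4)

p_late_given_shout = posterior(p_late, p_shout_given_late, p_shout_given_on_time)
```

With these placeholder values, hearing the shouting raises the probability that Horace was late from $1/5$ to $3/7$; the actual answer depends on the numbers given in the problem.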

This problem is not original.

Two variables are independent if knowing the value of one gives no information about the other. More precisely, random variables $X$ and $Y$ are independent if and only if the conditional distribution of $Y$ given $X$ is, for all possible realizations of $X$, equal to the unconditional distribution of $Y$. For discrete random variables this means $P(Y = y \mid X = x) = P(Y = y)$ for all relevant $x$ and $y$. For continuous random variables $X$ and $Y$, having a joint density function, it means $f_{Y \mid X}(y \mid x) = f_Y(y)$ for all relevant $x$ and $y$.
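For a finite joint table, independence can be checked mechanically. The sketch below uses the product form $P(X = x,\ Y = y) = P(X = x)\, P(Y = y)$, which agrees with the conditional form wherever $P(X = x) > 0$ and avoids dividing by zero. The function name `is_independent` and both example tables are hypothetical.

```python
from fractions import Fraction
from itertools import product


def is_independent(joint):
    """Check P(X = x, Y = y) == P(X = x) * P(Y = y) for every pair (x, y)."""
    xs = {x for x, _ in joint}
    ys = {y for _, y in joint}
    # Marginals: sum the joint pmf over the other variable.
    px = {x: sum(joint.get((x, y), 0) for y in ys) for x in xs}
    py = {y: sum(joint.get((x, y), 0) for x in xs) for y in ys}
    return all(joint.get((x, y), 0) == px[x] * py[y] for x, y in product(xs, ys))


# Two fair coin flips: every outcome has probability 1/4.
two_fair_coins = {(x, y): Fraction(1, 4) for x, y in product((0, 1), repeat=2)}

# A perfectly correlated pair: Y always equals X.
copied_coin = {(0, 0): Fraction(1, 2), (1, 1): Fraction(1, 2)}

fair_result = is_independent(two_fair_coins)
copied_result = is_independent(copied_coin)
```

Exact fractions are used so that the equality test is not affected by floating-point rounding; with float probabilities a tolerance would be needed instead.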

A biased coin is tossed repeatedly. Assume that the outcomes of different tosses are independent and the probability of heads is for each toss. What is the probability of obtaining an even number of heads in 5 tosses?
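Because the tosses are independent, the number of heads is binomial, and the probability of an even count is the sum of the binomial probabilities over even $k$. The sketch below computes that sum and compares it with the closed form $\tfrac{1}{2}\left(1 + (1 - 2p)^n\right)$, which follows from averaging the expansions of $(q + p)^n$ and $(q - p)^n$ with $q = 1 - p$. The function names are hypothetical, and the value of `p` is an assumed placeholder rather than the value from the problem.

```python
from fractions import Fraction
from math import comb


def prob_even_heads(p, n):
    """Sum the binomial pmf over even head counts k = 0, 2, 4, ..."""
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(0, n + 1, 2))


def prob_even_heads_closed_form(p, n):
    """Closed form: ((q + p)^n + (q - p)^n) / 2 with q = 1 - p."""
    return (1 + (1 - 2 * p) ** n) / 2


# Assumed placeholder value for the heads probability, NOT from the problem.
p = Fraction(1, 3)
by_sum = prob_even_heads(p, 5)
by_formula = prob_even_heads_closed_form(p, 5)
```

Both routes give the same exact fraction for any `p`; substituting the heads probability from the problem gives the requested answer for 5 tosses.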

Seen as a function of $y$ for given $x$, $P(Y = y \mid X = x)$ is a probability mass function, so the sum over all $y$ (or the integral, if it is a conditional probability density) is 1. Seen as a function of $x$ for given $y$, it is a likelihood function, so the sum over all $x$ need not be 1.

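Let $(\Omega, \mathcal{F}, P)$ be a probability space, $\mathcal{G} \subseteq \mathcal{F}$ a $\sigma$-field in $\mathcal{F}$, and $X : \Omega \to \mathbb{R}$ a real-valued random variable measurable with respect to the Borel $\sigma$-field $\mathcal{R}^1$ on $\mathbb{R}$. It can be shown that there exists a function $\mu : \mathcal{R}^1 \times \Omega \to \mathbb{R}$ such that $\mu(\cdot, \omega)$ is a probability measure on $\mathcal{R}^1$ for each $\omega \in \Omega$ (i.e., it is regular) and $\mu(H, \cdot) = P(X \in H \mid \mathcal{G})$ (almost surely) for every $H \in \mathcal{R}^1$. For any $\omega \in \Omega$, the function $\mu(\cdot, \omega) : \mathcal{R}^1 \to \mathbb{R}$ is called a conditional probability distribution of $X$ given $\mathcal{G}$. In this case,

$$E[X \mid \mathcal{G}] = \int_{-\infty}^{\infty} x\, \mu(dx, \cdot)$$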

almost surely.

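For any event $A \in \mathcal{F}$, define the indicator function

$$\mathbf{1}_A(\omega) = \begin{cases} 1 & \text{if } \omega \in A, \\ 0 & \text{if } \omega \notin A, \end{cases}$$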

which is a random variable. Note that the expectation of this random variable is equal to the probability of $A$ itself:

$$E(\mathbf{1}_A) = P(A).$$

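Then the conditional probability given a $\sigma$-field $\mathcal{G} \subseteq \mathcal{F}$ is a function $P(\cdot \mid \mathcal{G}) : \mathcal{F} \times \Omega \to [0, 1]$ such that $P(A \mid \mathcal{G})$ is the conditional expectation of the indicator function for $A$:

$$P(A \mid \mathcal{G}) = E(\mathbf{1}_A \mid \mathcal{G}).$$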

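In other words, $P(A \mid \mathcal{G})$ is a $\mathcal{G}$-measurable function satisfying

$$\int_G P(A \mid \mathcal{G})(\omega)\, dP(\omega) = P(A \cap G) \quad \text{for all } A \in \mathcal{F},\ G \in \mathcal{G}.$$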

A conditional probability is regular if $P(\cdot \mid \mathcal{G})(\omega)$ is also a probability measure for all $\omega \in \Omega$. An expectation of a random variable with respect to a regular conditional probability is equal to its conditional expectation.

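- For the trivial sigma algebra $\mathcal{G} = \{\emptyset, \Omega\}$, the conditional probability is a constant function, $P(A \mid \{\emptyset, \Omega\}) \equiv P(A)$.
- For $A \in \mathcal{G}$, as outlined above, $P(A \mid \mathcal{G}) = \mathbf{1}_A$.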
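On a finite probability space the measure-theoretic definition becomes concrete. If the conditioning $\sigma$-field is generated by a partition into blocks of positive probability, the conditional probability of an event is the function that is constant on each block $B$, with value $P(A \cap B) / P(B)$. The sketch below illustrates this and the two special cases. The fair-die space, the partition, and the helper names are all assumptions made for the example.

```python
from fractions import Fraction

# Assumed finite probability space: one roll of a fair six-sided die.
omega = frozenset({1, 2, 3, 4, 5, 6})
prob = {w: Fraction(1, 6) for w in omega}


def P(event):
    """Probability of an event (a set of outcomes)."""
    return sum(prob[w] for w in event)


def conditional_probability(event, partition):
    """Return {omega: P(event | G)(omega)} for G generated by the partition.

    Assumes every block of the partition has positive probability.
    """
    result = {}
    for block in partition:
        value = P(event & block) / P(block)
        for w in block:
            result[w] = value  # constant on each block, hence G-measurable
    return result


low_high = [frozenset({1, 2, 3}), frozenset({4, 5, 6})]
even = frozenset({2, 4, 6})

cond_even = conditional_probability(even, low_high)
cond_trivial = conditional_probability(even, [omega])
cond_block = conditional_probability(frozenset({1, 2, 3}), low_high)
```

Here `cond_trivial` is the constant $P(A) = 1/2$, matching the trivial sigma algebra case, and `cond_block` equals the indicator of $\{1, 2, 3\}$, matching the case where the event itself belongs to the conditioning $\sigma$-field.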

Source: https://brilliant.org/wiki/conditional-probability-distribution/
